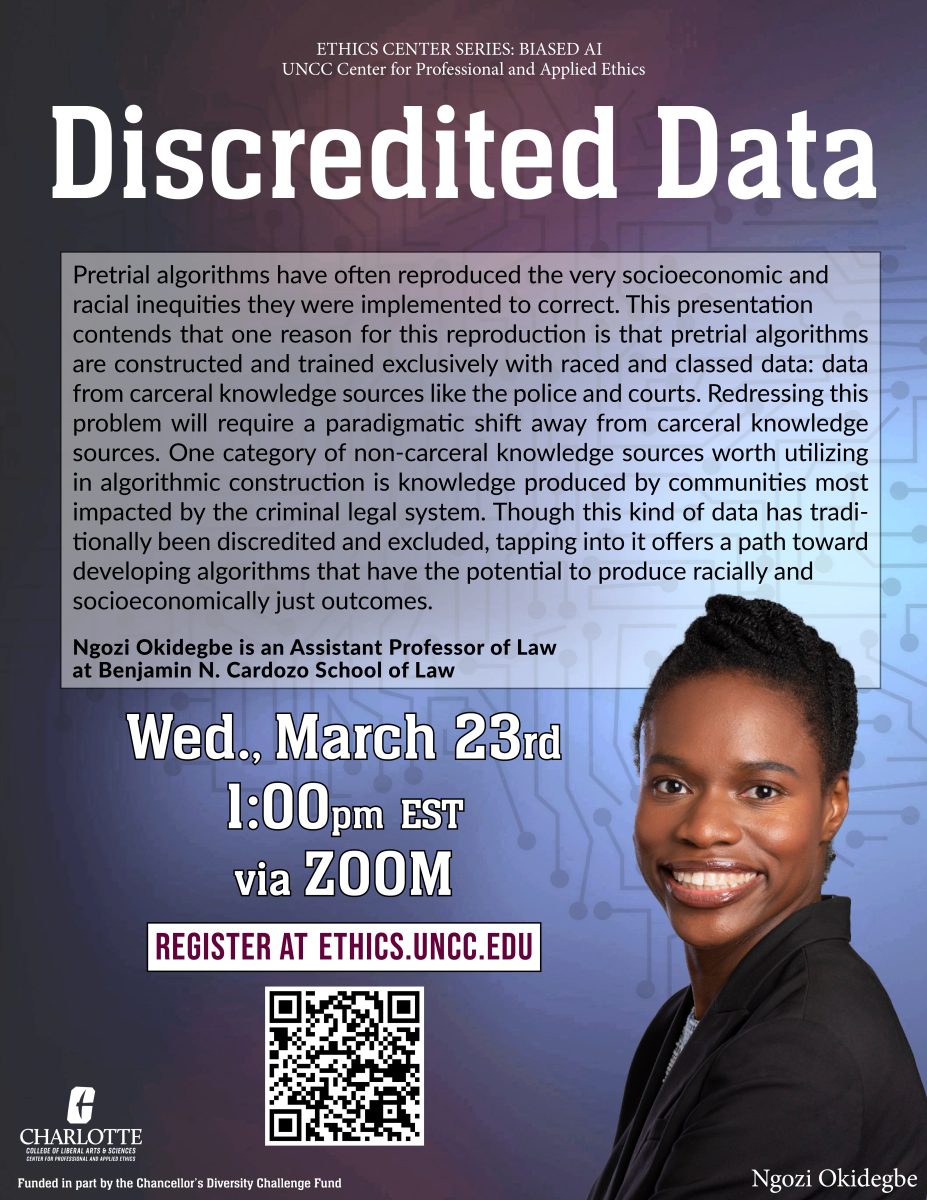

NGOZI OKIDEGBE, “DISCREDITED DATA”

March 23, 1:00 ET: Ngozi Okidegbe (Asst. Prof. of Law, Cardozo School of Law) presents “Discredited Data” as part of our series on Bias in AI.

–> Zoom Registration: https://uncc.zoom.us/meeting/register/tJMoceytrzwoHdQZtSi0WBPMkpdQVkH_5lYC

Abstract: Jurisdictions are increasingly employing pretrial algorithms as a solution to the racial and socioeconomic inequities in the bail system. But in practice, pretrial algorithms have reproduced the very inequities they were intended to correct. Scholars have diagnosed this problem as the biased data problem: pretrial algorithms generate racially and socioeconomically biased predictions, because they are constructed and trained with biased data. This presentation contends that biased data is not the sole cause of algorithmic discrimination. Another reason pretrial algorithms produce biased results is that they are exclusively built and trained with data from carceral knowledge sources – the police, pretrial services agencies, and the court system. Redressing this problem will require a paradigmatic shift away from carceral knowledge sources toward non-carceral knowledge sources. This presentation explores knowledge produced by communities most impacted by the criminal legal system (“community knowledge sources”) as one category of non-carceral knowledge sources worth utilizing. Though data derived from community knowledge sources have traditionally been discredited and excluded in the construction of pretrial algorithms, tapping into them offers a path toward developing algorithms that have the potential to produce racially and socioeconomically just outcomes.

About the series: Artificial Intelligence (AI) systems are poised to offer potentially revolutionary changes to fields as diverse as healthcare and traffic systems. However, there is a growing concern both that deployment of AI systems is increasing social power asymmetries and that ethical attention to those asymmetries requires going beyond technical solutions and incorporating research on unequal social structures. Because AI systems are embedded in social systems, technical solutions to bias need to be contextualized in their interaction with those larger systems. This series explores problems and solutions in making AI more just. Earlier talks by Ben Green, Serena Wang and Alex Hanna are archived on the Center’s YouTube Channel.